Artificial Intelligence is Contributing to the Spread of Misinformation

The Rise of AI

Promising to make our lives easier, Artificial Intelligence has opened unimaginable doors. From face recognition to enhanced drug development, advances in tech seem limitless. While AI has begun to revolutionize how we live our everyday lives, convenience is a small reward for the potential damage that AI poses to our present and future.

Due to its clickable nature, fake news thrives on social media platforms. While some fake stories are harmless, several have had devastating repercussions. One example, in particular, is the rise of “deepfake technology”. A deepfake is a convincing photo, video, or audio hoax created with artificial intelligence. While manipulating video is not a new concept, this 21st-century take on Photoshop is challenging to spot and is continually weaponized in modern media.

With the 2024 elections approaching, experts are worried that this type of technology will be weaponized against politicians and used to mislead voters with false information, the Associated Press reports.

However, this would not be the first time AI has been used to incite violence and mislead voters. Earlier this year, the Republican National Committee released a 30-second ad generated entirely with AI. The ad uses fear tactics to sway voters, and every scenario in the video was artificially generated.

AI Contributing to Media Distrust

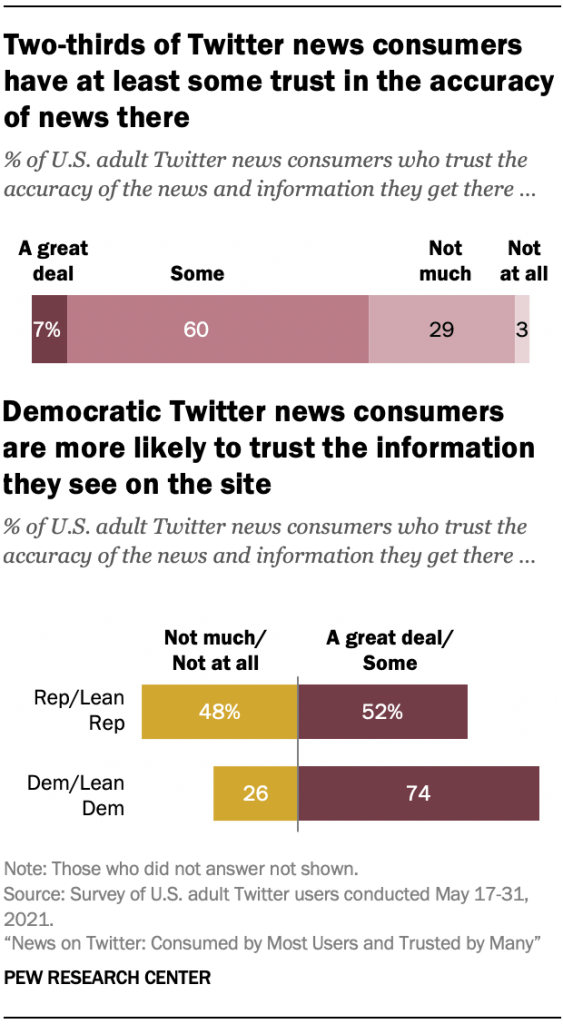

As AI continues to develop, the line between what is real and what is fake has become blurred. Since the beginning of the news media, viewers have relied heavily on video and audio evidence as a bedrock of truth. The problem is compounded by the fact that most US adults now rely on social media as a news source.

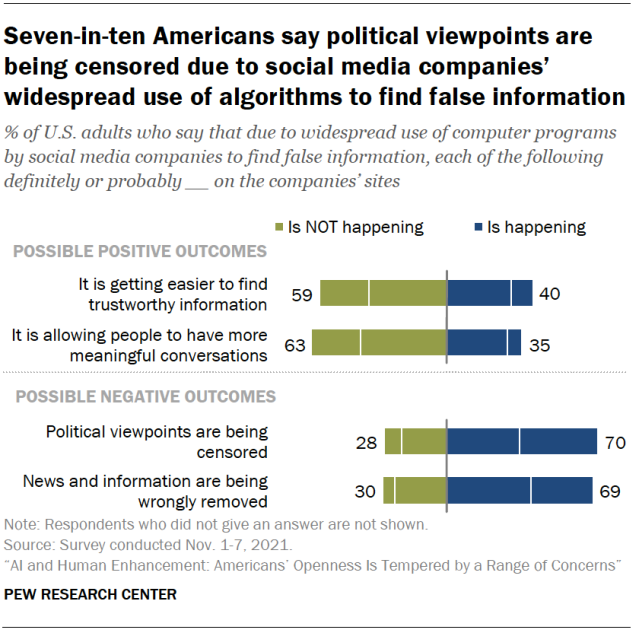

This progression of hyperrealistic AI has created mistrust of the media in general. Because many readers are reluctant to double-check news sources, misinformation spreads everywhere, and it is getting progressively more challenging to spot. While some social media platforms use AI detection to filter false information, it is not always accurate. In fact, according to the Pew Research Center, 7 out of 10 Americans say that these social media filters have led to unreasonable censorship, while the promotion of false information is still not under control.

On social media, “shadowbanning” is becoming another consequence of this new technology. Shadowbanning is the practice of a social media company’s AI algorithms preventing a user’s content from being seen by others without notifying the user. Instagram users are now accusing Meta of using AI to censor and shadowban posts related to Palestinian viewpoints amid the Gaza conflict.

Meta stated in a blog post that “it is never our intention to suppress a particular community or point of view.” Meta also acknowledged that its censorship policies do not work perfectly every time.

Over the last several weeks, social media has been flooded with posts covering recent events in the Gaza Strip. Because many Palestinian users have had their posts censored based solely on their beliefs, Meta platforms such as Facebook, Instagram, and Threads have been overwhelmed with one-sided information about a deep-rooted and complex historical topic.

Because the Israeli-Palestinian conflict is such an emotionally charged topic, many Palestinians feel they have no space to share their beliefs on social media without fear of censorship or violence.

In addition to sitewide censorship attributed to artificial intelligence, Meta came under fire again after some users documented a glitch that translated “Palestinian” followed by the Arabic phrase “Praise be to Allah” to “Palestinian terrorists” on multiple accounts. These faults led to several account strikes and bans, censoring Palestinian voices. Deborah Brown, a senior researcher and advocate on digital rights at the campaign group Human Rights Watch, stated in an interview with Wired that this censorship on social media “seriously hinders the ability of journalists and human rights monitors to document mounting abuses [in the Gaza Strip].” The censorship of media coming out of Gaza affects not only those absorbing the information but those living through it as well.

“I don’t have the truth anymore; I have to watch it happen through the lens of Western media,” says a Palestinian student at Chapman, who requested to stay anonymous. Without proper education, the student says, “People who had no knowledge of the last 70+ years clung to the first thing that the Western news pushed out to them.” Following Gaza’s internet blackouts, my interviewee feels they have no other choice than to turn to independent journalists who haven’t been censored, such as Motaz Azaiza, to stay informed and educated about the atrocities happening within Gaza. “I fear that Palestinians are being killed in the dark,” they say. For the sake of others, and following threats they have experienced on the Chapman campus, they will continue to use their voice to educate.

Misinformation regarding the Israeli-Palestinian conflict is pervasive. In the sea of content, it is essential to double-check sources and not blindly believe everything posted on the internet or within news outlets, regardless of any side or bias.

UK Summit Findings

Today, delegates from 28 nations, including the United States and China, met at the United Kingdom’s AI Safety Summit to discuss the potential harm of artificial intelligence. Many of the representatives pushed for possible legislation and signed an agreement under which the United States, European Union, and China would work together to manage the imminent risk from artificial intelligence.

Kamala Harris stated in her speech at the summit, “President Biden and I believe that all leaders have a moral, ethical, and social duty to make sure that AI is adopted and advanced in a way that protects the public from potential harm and ensures that everyone is able to enjoy its benefits.”

As AI continues to develop, it is essential to remember that we live in a world of misinformation. While artificial intelligence promises to make our lives easier, it is not the answer to our problems and often causes more harm than good. Right now, legislation is being drafted to combat the dangers of AI, but experts are still determining the long-term risks and consequences.

Sources:

https://www.theguardian.com/technology/2023/oct/09/x-twitter-elon-musk-disinformation-israel-hamas

https://www.nytimes.com/2023/10/16/world/middleeast/israel-hamas-war-social-media.html

https://www.theguardian.com/technology/2023/oct/18/instagram-palestine-posts-censorship-accusations

https://www.wired.com/story/palestinians-claim-social-media-censorship-is-endangering-lives/